Lessons from the ACC 2021 Meeting

Since Stop and Think is about evidence, this review will focus more on the science of newsmaker studies.

The American College of Cardiology held its virtual annual meeting this month. What follows is a brief recap, with links for further reading.

The Clear Winner

The best study at ACC was a trial of surgical left atrial appendage closure called LAAOS III. Led by Canadian heart surgeon Richard Whitlock, the trial randomized patients with atrial fibrillation (AF) who were going to have heart surgery for other reasons (bypass or valvular disease) to closure of the appendage or no closure. The primary outcome measure was stroke.

To my surprise, patients who had their appendage closed had significantly fewer strokes than those who did not. The add-on procedure resulted in no harm.

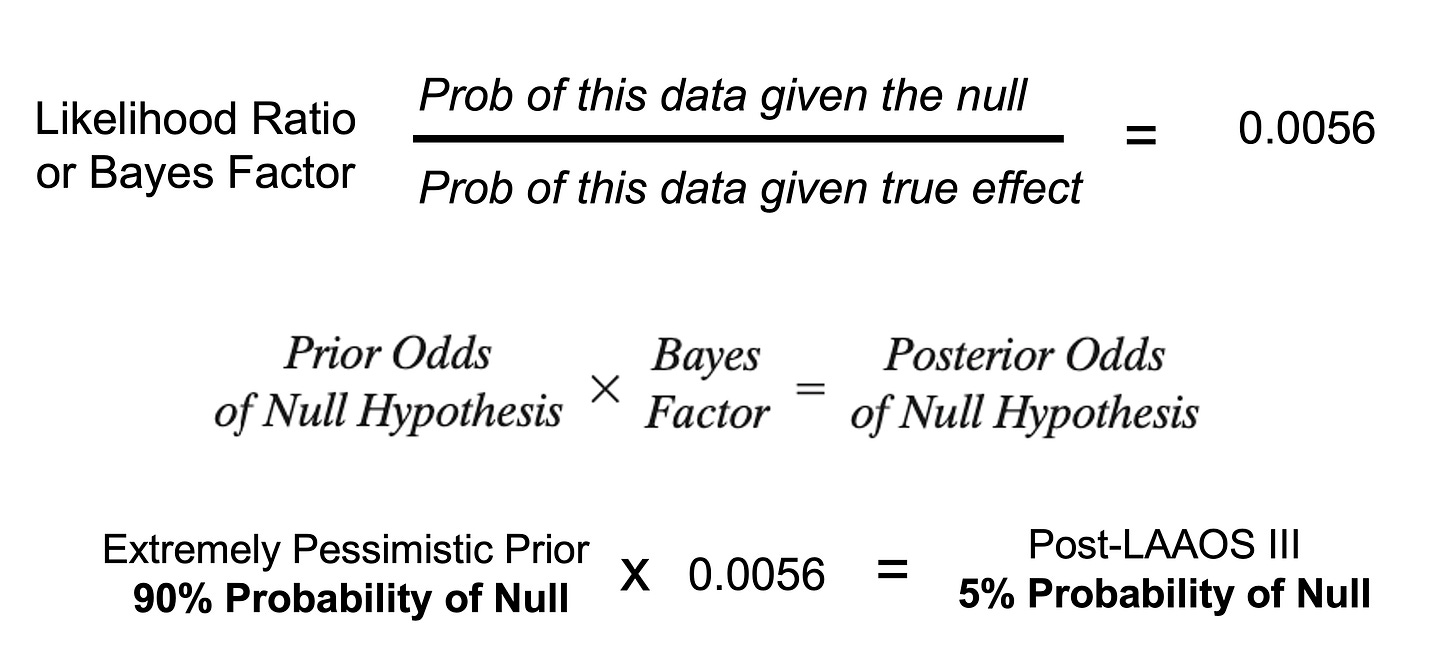

Since I am a grandfather and love watching my grandkids use Bayes' theorem to learn, I like to think of trials in Bayesian terms: how do the data update my prior beliefs?

Well, the LAAOS III results were so strong that even if you were pessimistic, as I was, the trial converts you into an enthusiast (see picture).
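For readers who want to see the Bayesian idea in numbers, here is a minimal sketch using a normal approximation on the log hazard-ratio scale. The skeptical prior (centered on no effect, standard deviation 0.2) is a hypothetical choice made for illustration, and the trial inputs (hazard ratio 0.67, 95% CI 0.53 to 0.85) are illustrative values roughly in line with the published LAAOS III primary result; check them against the paper before relying on them. The function name `posterior_log_hr` is made up for this example.

```python
import math
from statistics import NormalDist


def posterior_log_hr(prior_mean, prior_sd, hr, ci_low, ci_high, z=1.96):
    """Normal-normal conjugate update of a prior on log(HR) with a trial result."""
    # Treat the trial likelihood as normal on log(HR); back out its SE from the 95% CI.
    like_mean = math.log(hr)
    like_se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    # The posterior mean is a precision-weighted average of prior and data.
    w_prior = 1 / prior_sd ** 2
    w_data = 1 / like_se ** 2
    post_var = 1 / (w_prior + w_data)
    post_mean = post_var * (w_prior * prior_mean + w_data * like_mean)
    return post_mean, math.sqrt(post_var)


# Hypothetical skeptical prior: centered on HR = 1 (log HR = 0), SD 0.2 on the log scale.
post_mean, post_sd = posterior_log_hr(0.0, 0.2, hr=0.67, ci_low=0.53, ci_high=0.85)
# Probability that log(HR) < 0, i.e. that closure truly lowers stroke risk.
prob_benefit = NormalDist(post_mean, post_sd).cdf(0.0)
print(f"posterior HR ~ {math.exp(post_mean):.2f}, P(HR < 1) ~ {prob_benefit:.3f}")
```

Even with a prior that starts at "no effect," the posterior lands well below a hazard ratio of 1 with a high probability of benefit. Widening the prior's standard deviation pulls the posterior closer to the trial result; narrowing it makes the prior harder to move. The normal approximation is a convenience, not the only way to do this.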

When I send a patient with AF to surgery, I will ask the surgeon to cut off the appendage the way it was done in LAAOS III.

My column on this trial is Eight Things to Know About the Surgical Left Atrial Appendage Occlusion (LAAOS III) Trial (free with email).

LAAOS III is exemplary because the trialists asked a simple question, conducted the trial with minimal bias and measured an important outcome—stroke.

If only more medical science were like this.

A Howler Study

One of the best ways to learn about medical evidence is to study the howlers, my term for terribly flawed studies.

At ACC, during a “featured clinical research” session, a report on the one-year results of the Watchman registry served as an example of how not to conduct and present clinical research.

Watchman is a device that plugs the left atrial appendage from the inside. It tries to do what the surgeons did in LAAOS III, without the surgery. This technique has been controversial since its inception. I’ve been a vocal critic of the procedure, but let’s leave the history aside and focus on how the current study failed.

The Watchman registry purports to show that patients who had this procedure had a 77% reduction in stroke compared to untreated patients.

Our neutral Martian would assume that the only way to know that a treatment reduced stroke by 77% would be to compare two randomized groups, one that got the device and one that did not. That is because randomization (mostly) balances out everything but the procedure.

But that’s not what happened. Instead, the researchers tallied the stroke rate of those who got the device (1.5%) and compared that to an estimate in hypothetical patients who were left untreated (6.2%).

This is an abomination of science—for four reasons.

The stroke rate of hypothetical patients is unknowable

After a Watchman implant, patients receive anticoagulant drugs for weeks to months, which would depress stroke rates

The researchers didn’t have follow-up data on one-third of their patients

You can’t reliably compare two groups of patients that are not randomized—because they may differ in other ways that affect outcomes
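To see why the missing follow-up matters, here is a minimal sketch of a simple sensitivity analysis. It assumes, hypothetically, that the 1.5% stroke rate was measured only among the two-thirds of patients with follow-up, and asks what the overall rate would be under different guesses for the missing third. The function name `blended_rate` and the guessed rates are illustrative, not taken from the registry report.

```python
def blended_rate(observed_pct, missing_pct, missing_fraction):
    """Overall event rate (%) if the unobserved fraction of patients had missing_pct."""
    # Weighted average of the followed-up group and an assumed rate for the lost group.
    return (1 - missing_fraction) * observed_pct + missing_fraction * missing_pct


# Hypothetical guesses for the stroke rate among patients lost to follow-up.
for guess in (1.5, 3.0, 6.2):
    overall = blended_rate(1.5, guess, 1 / 3)
    print(f"missing third at {guess}% -> overall {overall:.1f}%")
```

If the patients who were lost fared like the hypothetical untreated patients, the device group's stroke rate would roughly double. The point is not that this happened, only that with a third of the data missing, the registry cannot rule it out.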

Registry data are fine for telling us how many procedures are being done, who is doing them, and what complications are occurring. But they cannot be used to infer causality.

Our neutral Martian may wonder why a scientific organization like the ACC allows such howlers.

I do not know, but it is hardly cynical to worry that the strong financial support the makers of Watchman provide to the organization and its meetings played a role.

Beware of Spin

Another way to learn about medical evidence is to study the technique of spin.

Spin is language that distracts from the primary outcome of an experiment.

In this case, the experiment was a trial comparing a drug called sacubitril/valsartan, known as Entresto, with an old standby drug, ramipril, in patients who had just had a heart attack bad enough to weaken the heart.

We learn in high school that scientists must decide, before an experiment, what outcome they will measure. This is important to prevent bias.

In the trial PARADISE-MI, the primary outcome was time to first occurrence of death from cardiac causes or heart failure—a common measure in cardiology.

Here is a screenshot of PARADISE-MI on clinicaltrials.gov, a website where scientists register their experiments before the results are known.

PARADISE-MI found that sac/val reduced the primary outcome by about 0.7 events per 100 patient-years. That small difference did not reach an accepted statistical threshold, which means that if the two drugs truly performed the same, data like these would not be that surprising; the difference could be due to chance alone.
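The idea of "could be due to chance alone" can be made concrete. Here is a minimal sketch, assuming a normal approximation on the log hazard-ratio scale: back out the standard error from a reported 95% confidence interval, then compute a two-sided p-value. The inputs (hazard ratio 0.90, 95% CI 0.78 to 1.04) are illustrative values of the sort reported for PARADISE-MI; check them against the published trial, and expect small differences from the reported p-value because published intervals are rounded. The function name `p_from_ci` is made up for this example.

```python
import math
from statistics import NormalDist


def p_from_ci(hr, ci_low, ci_high, z_crit=1.96):
    """Approximate two-sided p-value for a hazard ratio, given its 95% CI."""
    log_hr = math.log(hr)
    # Width of the CI on the log scale spans 2 * 1.96 standard errors.
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    # How many standard errors the estimate sits from "no difference" (log HR = 0).
    z = log_hr / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


p = p_from_ci(0.90, 0.78, 1.04)
print(f"approximate p = {p:.2f}")
```

A p-value of this size says that if the drugs were truly equivalent, a difference at least this large would turn up fairly often by chance. The confidence interval crossing 1.0 tells the same story.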

The neutral Martian would say—crap, the new drug did not outperform the inexpensive old one; but at least we learned something important, and these patients can now be treated with a less costly drug.

But that is not what happened.

Instead, during the presentation, the presenter, an influential scientist, showed two other outcome measures that looked more favorable to sacubitril/valsartan. He did, of course, call these analyses “hypothesis-generating,” but then made this his second concluding bullet point (emphasis mine):

“Pre-specified observations of reductions in both the investigator reports of the primary composite as well as in the total (recurrent) adjudicated events support incremental clinical benefits of sacubitril/valsartan.”

This is classic spin because it is language that distracts from the nonsignificant primary endpoint. And it worked.

I spoke with an academic cardiologist yesterday and she said that at an ACC wrap-up presentation at her center, young learners were swayed by the spin. They did not see PARADISE-MI as a nonsignificant trial.

My column on this trial is titled: PARADISE-MI Makes Me Question the Benefits of Sacubitril/Valsartan.

There was a lot more news from ACC, but since I am at 900 words, I will link to other sources.

Renal denervation is a procedure promoted for treating hypertension. Since high blood pressure is so common, there is a lot of hope…and hype. My column is titled: Renal Denervation for Resistant Hypertension Is Not Back on Track

Oodles of data link alcohol and AF. A study from ACC further strengthens that association. Here is my interview with the senior scientist Greg Marcus.

And for a detailed recap of many other ACC studies, take a listen to This Week in Cardiology—a weekly podcast I do for theheart.org | Medscape Cardiology.